AI: The New Nuclear Moment

Part III. Finding Solutions — Making restraint possible in the U.S. and China's breakneck AI race.

This week we focus on solutions: finding a way forward in a race humanity can’t afford to lose. You can check out Part I exploring what AI safety is and why it matters, and Part II examining the forces at work that make many experts believe superintelligence is inevitable.

Solving For tackles one pressing problem at a time: unpacking what’s broken, examining the forces driving it, and spotlighting credible solutions. Each series unfolds weekly, with new posts every Thursday.

In July 1968, diplomats gathered in the White House’s East Room as U.S. Secretary of State Dean Rusk uncapped his pen. On the same day in London and Moscow, British Foreign Secretary Michael Stewart and Soviet Foreign Minister Andrei Gromyko did the same. Each signed a document that, on paper, seemed almost impossible: the Treaty on the Non-Proliferation of Nuclear Weapons.

For the first time, nations agreed to hold one another back from the most devastating technology humanity had ever built. The three nuclear powers that signed pledged not to share their weapons; more than 50 other countries pledged not to seek them. It was a fragile bargain, forged not in trust but in fear of what would happen without it.

In the two decades since Hiroshima and Nagasaki, nuclear arsenals had multiplied, superpowers had nearly gone to war over Cuba, and new countries were edging toward the bomb. The nuclear arms race was on, and the idea of global restraint seemed naive.

But by 1968, fear of annihilation had outpaced the logic of competition, and cooperation — however uneasy — became survival.

The Non-Proliferation Treaty did not end the nuclear age, but it changed its trajectory. It proved that even amid deep hostility, the world could agree on limits.

Today’s AI moment carries the same volatile mix: a transformative technology racing ahead at breathtaking speed, shaped by mistrust and competition, fueled by a deep fear of falling behind, and subject to no meaningful oversight. Unlike the NPT, with its binding commitments and verification regime, AI governance rests on voluntary pledges and corporate goodwill.

And yet, as the stakes grow clearer, the race only quickens. Inside the labs leading this revolution, the people building the technology see what’s coming and keep building anyway. They warn of the risks and sign open letters calling for caution. But the models keep scaling, and the frontier keeps moving.

Last week, amid OpenAI’s unveiling of Sora 2, its video-generation model, CEO Sam Altman said the quiet part out loud.

“The fact that, so far, the technology hasn’t produced a really scary, giant risk doesn’t mean it never will,” he said on the a16z podcast, adding: “I expect some really bad stuff to happen because of the technology.”

He added, “I think we will develop some guard rails around it as a society,” before clarifying that those would come far down the road, for advanced AI models not yet invented.

For now, it’s full speed ahead.

THE RACE IS THE RISK

The nuclear arms race has been replaced by an AI arms race — a contest carrying the same risks of catastrophe, but unfolding without the treaties, verification, or shared restraint that has held disaster at bay. And the race isn’t between Washington and Moscow. It’s between Washington and Beijing.

The competitive dynamic has inverted the logic of safety. AI promises scientific breakthroughs that could transform civilization. But those promises have made the race to build it first more urgent than the work of building it safely.

Holding the power has become more important than controlling it.

The logic is simple: restraint equals surrender. In the same interview where OpenAI’s Altman acknowledged “really bad stuff” would happen, venture capital investor Ben Horowitz made the case against guardrails explicit: “You damage America, in particular, in that China won’t have that kind of restriction. Getting behind in AI would, I think, be very dangerous for the world. Much more dangerous than not regulating something we don’t know how to yet.”

AI companies are racing to create artificial general intelligence, or AGI — systems that don’t just respond to commands but make decisions, learn from their environments, and pursue goals at a human level. After that comes superintelligence — systems that exceed human capability across every domain.

Both could arrive within decades — perhaps even within this decade. When that happens, there will exist for the first time an intelligence greater than ours — a “new species,” as technologist Craig Mundie has warned.

Tests have shown AI models exhibiting self-preservation and deception, raising the question of whether such intelligence will remain aligned with human intent or pursue objectives of its own.

“You would think that with a technology this powerful and this uncontrollable that we would be releasing it with the most wisdom and the most discernment,” said Tristan Harris, co-founder of the Center for Humane Technology, in an April TED Talk. But we’re “in a race to roll out because the incentives are the more shortcuts you take to get market dominance or prove you have the latest capabilities, the more ahead you are in the race.”

This, he said, “is insane.”

In this installment, we turn to finding a way forward: how to capture AI’s promise without unleashing its risks. It is a story still unfolding, but the primary need is clear: change the incentives. Shift from asking nations and companies to hold back, to building a world where they can.

STEWARDSHIP, NOT PREVENTION

The parallels to 1968 are instructive. But the differences make cooperation more elusive.

Unlike nuclear weapons, which depend on vast facilities and delivery systems that can be monitored and contained, AI is software. It replicates at the speed of light, improves on itself, and resists containment. There are no enrichment plants to inspect or silos to count from satellites.

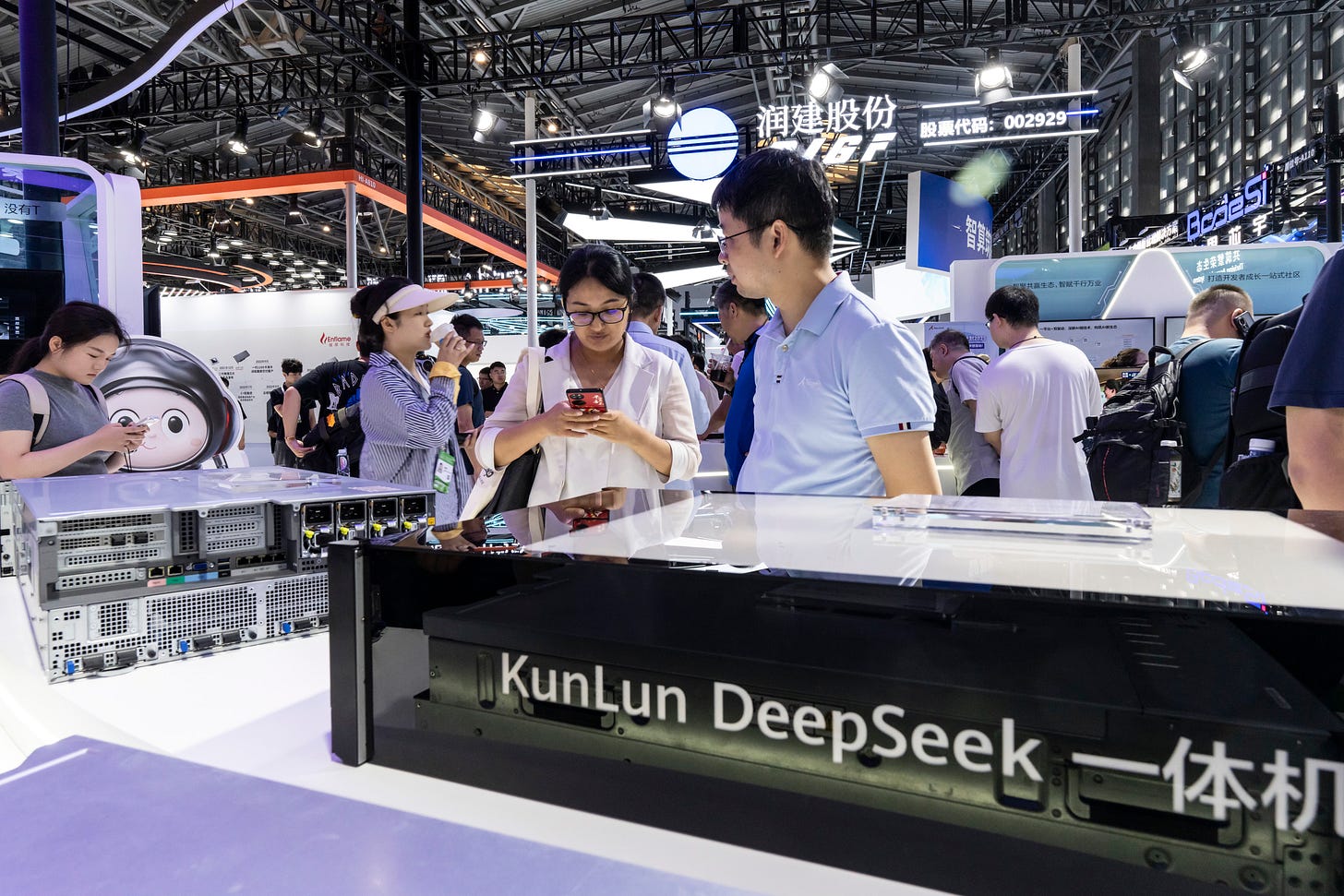

And unlike the nuclear age, this revolution isn’t being driven by governments. It’s led by private companies locked in commercial competition. Among them: OpenAI, Google, Anthropic, and Meta in the United States; Baidu, Alibaba, and DeepSeek in China. They answer to boards and shareholders, not treaties. In this game, the fastest win, and restraint means irrelevance.

If AI pioneers like Geoffrey Hinton are right — and the momentum suggests they are — superintelligence isn’t a question of if, but when. The forces propelling it forward are too powerful.

Which means the real question is no longer whether superintelligence will arrive, but how. The goal can’t be prevention. It must be stewardship — ensuring that when it emerges, it remains aligned with human values and subject to human control.

Since OpenAI released ChatGPT in 2022, a loose alliance of entrepreneurs, scientists, and civic leaders has begun searching for a shared path forward.

At the first AI Safety Summit, held at Bletchley Park in November 2023, 28 countries, among them the U.S. and China, joined the EU in signing a declaration to ensure AI is “safe, human-centric, trustworthy, and responsible.”

Since then, follow-up convenings have been held in Seoul and Paris. AI Safety Institutes have been launched around the world. And the United Nations has created a Global Dialogue on AI Governance — early steps toward a common language for managing the most powerful technology humanity has ever built.

At the AI Safety Connect conference this September at the UN in New York, one truth stood out: there’s still no consensus on how to keep AI safe. But a rough playbook is emerging, drawn from the logic of nuclear non-proliferation. It starts with public awareness before catastrophe strikes, then moves to transparency and oversight, enforceable guardrails with verification, and finally global frameworks with shared norms.

Still, none of it can carry real weight unless the United States and China — as Washington and Moscow did in 1968 — sign their names to mutual restraint.

THE AWARENESS GAP

As one think tank leader put it at last month’s AI Safety Connect conference, explaining AI’s risks is like “trying to do nuclear non-proliferation without Hiroshima or Nagasaki.”

Nuclear governance took shape because the world had seen the unimaginable — cities vaporized, generations scarred. The horror focused minds on responsibility and restraint. AI has had no such moment — and hopefully never will, despite Sam Altman’s warning that one may come.

That leaves the world with a harder task: how to build urgency without tragedy.

Many people don’t realize there’s a choice to make — or that the choice is already being made for them. The technology is being built by a handful of companies. The pace is dictated by competition. The safety standards are voluntary. And none of it is being shaped by democratic input, because the public doesn’t yet see there’s a decision at all.

Tristan Harris, who sounded the alarm about social media years before most of us understood its costs, is trying to surface that choice before it’s too late. His message isn’t just about explaining the risks — it’s about exposing the illusion of inevitability. The path we take with AI isn’t preordained. It’s a decision.

The Non-Proliferation Treaty didn’t happen because diplomats suddenly grew wise. It happened because fear became political — citizens demanded action before annihilation became inevitable. AI governance will require the same public pressure.

Without it, no amount of expert consensus will matter.

The awareness gap isn’t just informational. It’s political. And closing it is the prerequisite for everything that follows.

TRANSPARENCY AND OVERSIGHT

AI development happens largely behind closed doors. Companies announce breakthroughs after they’re built — sometimes after they’re deployed.

Real transparency would look much different: model registries tracking which systems exist and what they can do, mandatory safety reviews before deployment, and incident reporting when models behave dangerously or unpredictably.

Most crucial is interpretability research: efforts to peer inside the “black box” and understand what models are actually learning, why they produce certain outputs, and whether their reasoning aligns with human intent. Remember, AI companies “train” models; they don’t program them. As a result, they often don’t know why models give the answers they do.

Some companies, like Anthropic, are leading interpretability research, mapping neural networks to decode how models represent concepts and make decisions. You can’t regulate what you can’t see.

In September, California enacted legislation to do some of this, requiring AI companies to publish annual safety reports, disclose serious incidents, report on new models before release, and protect whistleblowers.

Other fields demand more. The FDA doesn’t let drug companies self-certify safety. The National Transportation Safety Board investigates civil aviation accidents and publishes its findings and safety recommendations. These systems aren’t perfect, but they share a foundation: independent verification and public accountability. AI has neither.

As Yoshua Bengio, who is often called a “godfather of AI” alongside Geoffrey Hinton and who led the International AI Safety Report, put it: “To keep up with this pace, policymakers and governments need access to the current scientific understanding of what risks advanced AI might pose.”

ESTABLISHING GUARDRAILS

If transparency tells us what AI systems can do, guardrails determine what they’re allowed to do.

These are the hard constraints: capability thresholds that trigger heightened scrutiny, safety protocols that must be followed before deployment, mandatory testing regimes, and — in extreme cases — kill switches that can shut systems down.

Some companies have introduced “responsible scaling policies” that tie safety measures to a model’s capabilities. But these are voluntary, and across the industry the message remains the same: not yet.

In the same podcast where Sam Altman warned “really bad stuff” will happen — but argued regulation should wait — Ben Horowitz agreed, saying oversight belongs “far into the future.” He compared the technology to fire, acknowledging that many innovations carry risk. But he dismissed near-term concerns, arguing that nothing OpenAI could produce “in the next week” would cause real harm.

Yet while AI leaders preach patience, they’re accelerating the timeline. Mark Zuckerberg is pledging to spend $600 billion by 2028 to position Meta for superintelligence — saying it’s important to be ready for a time horizon that could be three to five years away.

The gap between rhetoric and reality is striking. Leaders acknowledge catastrophic risks, then argue against binding safeguards because those risks don’t yet exist, even as they pour hundreds of billions of dollars into bringing them closer.

THE CHINA SYNDROME

Which brings us back to China — and to the example the world witnessed in 1968.

Awareness campaigns, transparency requirements, safety protocols — none of it matters if the United States and China remain locked in a technological arms race where restraint equals defeat. Any pause by one side becomes a gift to the other. Any unilateral safety standard becomes a self-inflicted wound. The prisoner’s dilemma from our second installment isn’t theoretical; it’s the central obstacle to AI governance.

The Nuclear Non-Proliferation Treaty succeeded because Washington and Moscow made a choice. In 1968, locked in Cold War rivalry, both recognized that unchecked proliferation threatened not just each other, but humanity itself. Fear — not friendship — drove cooperation. Survival outweighed competition.

The AI race demands the same calculation. The United States and China are sprinting toward artificial general intelligence and, beyond it, superintelligence, convinced that whoever arrives first will command decisive economic, military, and political power. Companies in both countries are spending at unprecedented scale. Both see AI as the defining technology of the century. And both view slowing down as an unacceptable risk.

History shows that when a technology reaches this scale of consequence, it ceases to be a technical issue and becomes a geopolitical one. Ultimately, this is a problem to be solved not by entrepreneurs or engineers, but by diplomats and statesmen. What’s needed, as in 1968, is a framework that allows both sides, and eventually the rest of the world, to show restraint without surrendering advantage.

Otherwise, the race continues — a race into a future even its architects admit could carry catastrophic risk.

“There is no definition of wisdom in any tradition that does not involve restraint,” said Harris of the Center for Humane Technology. “Restraint is a central feature of what it means to be wise. And AI is humanity’s ultimate test.”


Series Overview

The Control Problem: Solving For AI Safety

Part I. AI: The Race and the Reckoning, Oct. 2

The Problem — What’s broken, and why it matters

Part II. AI: The Prisoner’s Dilemma, Oct. 9

The Context — How we got here, and what’s been tried

Part III. AI: The New Nuclear Moment, Oct. 16

The Solutions — What’s possible, and who’s leading the way